Hampshire AI April 2025 - Accountable AI

06 May, 2025 | 10 Minutes

We recently held the second meeting of our Hampshire AI networking and discussion group, following a very successful launch earlier this year. With more than 45 people in the room, it was a dynamic and educational evening, offering the chance to discuss important issues currently affecting AI development, as well as to converse and collaborate with other local IT professionals from a broad range of industries, with diverse skillsets and experience.

We were joined by Michael Davey, Technology and Operations at Signly, a service to add sign language to websites to improve accessibility. Since 2017, Signly has had a singular vision: to deliver sign language everywhere. They have been integrated into numerous websites, where webpage content is captured by Signly, then translated and recorded by proficient deaf translators, and integrated into the webpage. Of the 48 registered translators in the UK, 9 of them work with Signly.

Michael is well placed to talk about the development of AI, and he delivered an engaging talk around the two main paths AI can take: a faster but lightly regulated approach, which may throw up ethical or societal challenges further down the line; or a more accountable, regulated model, offering longer-term stability but slowing down innovation. He also explored standardisation, regulation, trustworthiness and responsible AI development. The topic generated a lot of insightful conversation among attendees on the night.

The growth of AI and ethical considerations

Michael opened the evening’s talk with a look back at the development of AI and how fast it has happened. “If you had told me five years ago that between 2020 and 2025 AI would reshape civilisation, disrupt fundamental structures of power, knowledge and identity, and would become one of the biggest strategic challenges for technology leaders, I wouldn’t have believed you,” he began.

In 2020, we were only just beginning to come to terms with COVID-19 and the implementation of widespread remote working practices. At this time, AI wasn’t a household conversation. It was something that researchers, data scientists and a handful of cutting-edge startups were working on, but it wasn’t a concern for most people, and we didn’t have any idea just how much impact it was going to have. Fast forward to today, and AI is now mainstream, integrating into so many different aspects of people’s lives.

Michael explained that in just five years, AI has become the ‘strategic challenge of our time’: “In 5-10 years, it’s going to be all over in terms of this first phase of getting AI out into society in large numbers. Just as the golden age of computing reshaped the world in the mid-20th century, AI is experiencing its golden age today. And while we probably can all agree on what’s happening that’s affecting all of us, we might not be totally aligned on how to best take advantage of the opportunities.”

To give context to the challenges facing AI professionals, Michael presented a brief history of computing. He explored the golden age of computing, from the 1940s to the 1960s, one of the most revolutionary, intense and innovative periods in computing history. But, he reminded us, all these advancements happened independently and organically, driven by the availability of new electrical engineering techniques.

While the growth of the internet has been fast, AI adoption in 2020-2025 has been more than 10x faster. Not only that, AI is more than a tool: it is actively performing and replacing tasks, and has some cognitive function. With that comes a certain sense of responsibility, which is not a new idea. Joseph Weizenbaum, who wrote Computer Power and Human Reason (1976), laid the foundation for the modern ethical AI movement, advocating for AI regulation, human oversight and caution in allowing AI to make consequential decisions.

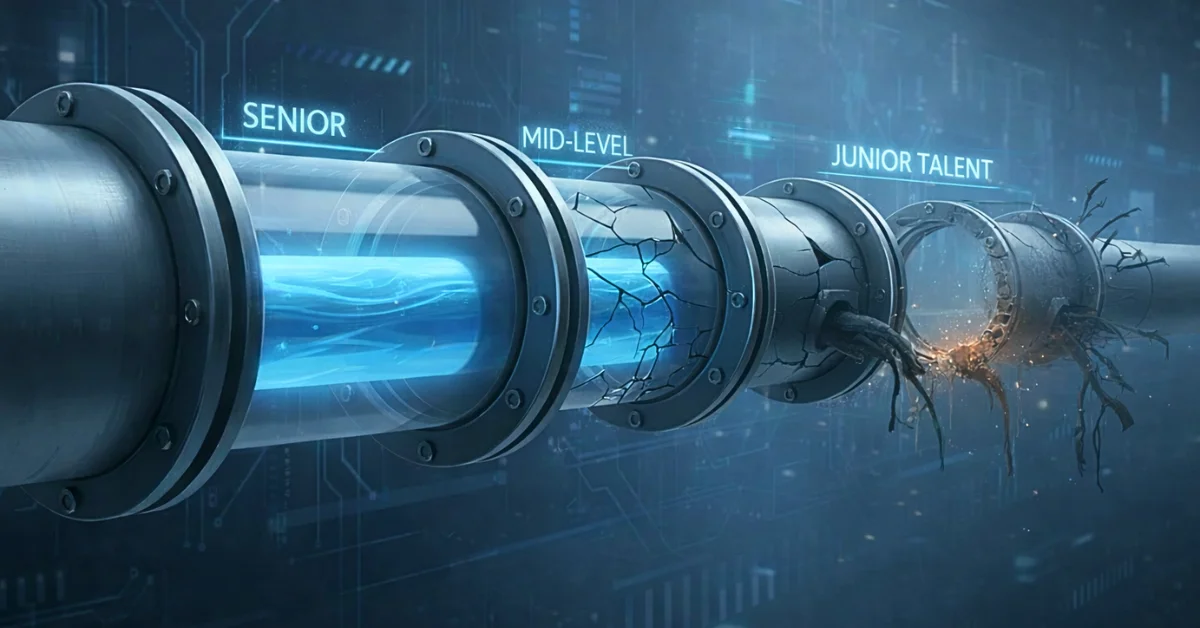

Now is the time when the future of AI is being shaped. Something that Signly has been considering is how to do AI ethically, which comes back to the initial dilemma that AI companies face:

- Lightly regulated AI – Move fast, break things, gain short-term market share, and deal with the legal fallout later.

- Accountable AI – Build trust, secure long-term partnerships, create a regulatory moat, and establish AI governance before regulators force it upon you.

Michael explained that while lightly regulated AI might bring short-term gains, the long-term trajectory points to regulated, ethical AI becoming the norm. As AI failures inevitably emerge, consumer trust will decline, prompting new regulations and laws and increasing demand for trustworthy and ethical AI products. “Ethical AI companies that can balance innovation with governance are likely to have the upper hand, being the most trusted, sustainable and commercially viable players, leading to resilience and long-term market dominance.”

Michael gave some practical advice and examples around the challenges, risks and implications that AI professionals and companies face. He asked the audience to imagine they are a solution architect evaluating two cloud technologies, which are broadly equivalent in most ways, except one is 10x faster. The natural impulse might be to pick the one that delivers fastest, but there are other aspects to consider beyond the technological. One of these is financial impact and risk: if you land the business with a large bill they weren’t expecting, this can reflect on your ability to do your job competently. Similarly, you need to consider the safety and societal risk of the product, and whether it infringes on the rights of the users; by not considering these risks you might be judged to be incompetent, negligent or even criminally liable. And, as one audience member rightly pointed out, the risks are not just financial; both brand and reputation can be at risk too.

Future developments in AI

Michael presented information on the biggest changes happening in the industry right now: spatial computing, meaning systems that interact with the physical world and simulate aspects of the real world; and quantum computing, the next big leap forward from classical computing to harnessing superposition and entanglement to solve problems, using non-Boolean quantum gates. “We’re seeing the start of a convergence between cloud, accelerated computing including GPUs, NPUs, neuromorphic, quantum, large generative models including GenAI, 3D Engines and Gaussian Splatting. That creates new opportunities. We call this ‘converged digital infrastructure’,” Michael explained.

He also talked about the development of AI engineering and how to marry that with ethics. He said that it’s important to treat ethics as the base from which AI is built, with moral principles and values guiding the design, and to think about responsible and safe AI from its inception and throughout its lifespan. This also includes having an effective governance system that is improved on an ongoing basis, and ensuring accountability for that governance at an individual level.

To help put this in perspective, Michael explained how this looks in real terms at Signly:

- Signing up to the EU AI Alliance and AI Pact

- Figuring out what the key risks are for AI in our industry vertical

- Writing guidelines that define the permitted and prohibited uses of AI

- Crafting an AI policy and framework based on standard risk management and ethics frameworks

- Defining what informed consent looks like

- Continuously engaging stakeholders to test our understanding of the appropriateness of each

- Adopting robust governance mechanisms in the form of ISO 42001

- Creating a community of practice for individuals and organisations that are undertaking ISO 42001

Addressing the AI professionals present, Michael spoke about the importance of trustworthiness and how a lack of it is a barrier to AI adoption, though one that can be removed with good governance. “If poor regulation can hinder innovation, good regulation can also promote it.”

The room was asked, by a show of hands, whether their companies took a lightly regulated or an accountable AI approach, and only one person admitted to a lightly regulated approach. However, when asked who had an AI statement on their website, only four hands were raised.

At this point, there was some engaging discussion and questions from the audience. One attendee asked how we could move past the challenges of trust with AI in the UK. Michael explained that this comes down to promoting AI literacy and doing it well, drawing on the EU AI Act and the AI risk management framework from NIST (the National Institute of Standards and Technology in the USA). Conversations bounced around the room, exploring topics like identifiable information and GDPR implications, the origins of data and grey areas around compliance. There was also talk about the laws and rules that are coming in for AI globally, but Michael stressed that each company should have its own policies and regulations as well.

Michael rounded off the evening with a call to action, asking us all to build AI that people can trust, that lasts and that makes history.

If you’re interested in coming to our next event or finding out more, please join our Hampshire AI LinkedIn group. We hope to see you soon.